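One more way to optimize the use of an index is to override the default value of the _id field. MongoDB always creates a unique index on _id, so storing a meaningful unique key there, instead of the automatically generated ObjectId, lets that built-in index serve your most common lookups without an additional index.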
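As a minimal sketch, assume a hypothetical `users` collection in which each user is uniquely identified by email address. The email itself can then serve as `_id`, and lookups by email use the built-in `_id` index. The helper names are illustrative. The pymongo calls `insert_one` and `find_one` appear only in comments because they need a running server.

```python
def make_user_document(email: str, name: str) -> dict:
    """Build a user document whose _id is the natural key (the email address).

    Hypothetical schema: emails are assumed to be unique and never to change.
    """
    # Normalize so that "Ann@Example.com" and "ann@example.com" map to one key.
    return {"_id": email.strip().lower(), "name": name}


def user_lookup_filter(email: str) -> dict:
    """Filter on _id, so the query is served by the built-in unique _id index."""
    return {"_id": email.strip().lower()}


# With pymongo (hypothetical db handle), this would be sent as:
#   db.users.insert_one(make_user_document("ann@example.com", "Ann"))
#   db.users.find_one(user_lookup_filter("ann@example.com"))
doc = make_user_document("  Ann@Example.com ", "Ann")
print(doc)
print(user_lookup_filter("ann@example.com"))
```

One design caveat: `_id` cannot be modified after a document is inserted, so this approach only suits keys that never change.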

Also, each index occupies disk space and memory, so a large number of indexes can lead to storage-related problems.
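Storage is only part of the cost, because each index must also be kept in step with writes. The sketch below estimates which indexes a write would touch. It relies on a simplifying assumption stated in the code: inserts and deletes update every index, while updates touch only indexes on changed fields. This is an illustrative model, not a description of storage-engine internals. The collection and index names are hypothetical.

```python
from typing import Dict, List, Optional, Sequence


def _paths_overlap(a: str, b: str) -> bool:
    """True if one dotted path equals or contains the other ("a" vs "a.b")."""
    return a == b or a.startswith(b + ".") or b.startswith(a + ".")


def indexes_to_maintain(
    indexes: Dict[str, Sequence[str]],
    changed_paths: Optional[Sequence[str]] = None,
) -> List[str]:
    """Return the names of indexes a write would have to update.

    Simplified model (an assumption): inserts and deletes (changed_paths=None)
    touch every index; an update touches only indexes whose key fields overlap
    a changed field path.
    """
    if changed_paths is None:
        return list(indexes)
    return [
        name
        for name, fields in indexes.items()
        if any(_paths_overlap(f, p) for f in fields for p in changed_paths)
    ]


# Hypothetical orders collection: three secondary indexes besides _id.
orders_indexes = {
    "_id_": ["_id"],
    "status_1_created_at_-1": ["status", "created_at"],
    "customer.email_1": ["customer.email"],
    "tags_1": ["tags"],
}

print(indexes_to_maintain(orders_indexes))                # an insert
print(indexes_to_maintain(orders_indexes, ["status"]))    # $set on status
print(indexes_to_maintain(orders_indexes, ["customer"]))  # replace a subdocument
print(indexes_to_maintain(orders_indexes, ["notes"]))     # unindexed field
```

Every index you add grows the first list, which every insert pays for.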

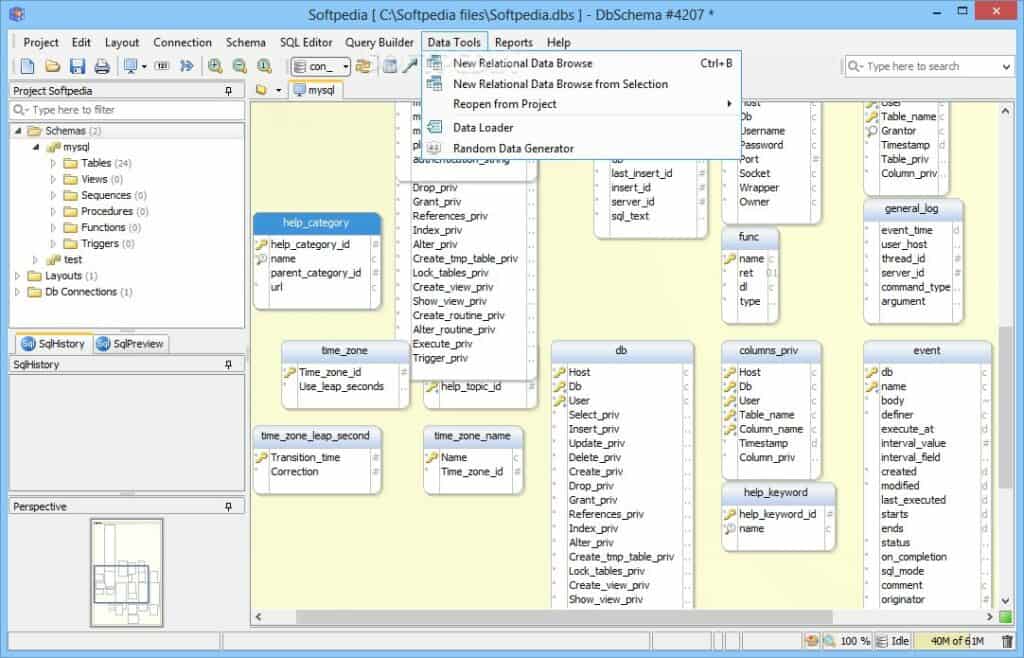

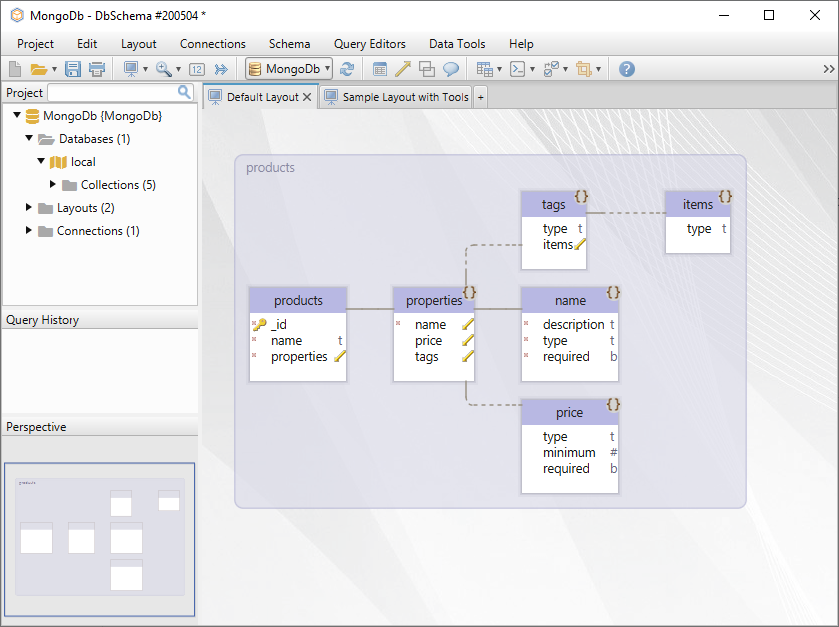


Instead of updating the whole document, you can use field modifiers such as `$set` and `$inc` to update only specific fields in the document. With the legacy MMAPv1 storage engine, this could trigger an in-place update in memory and therefore improve performance. With any engine, it keeps the write small and avoids overwriting fields you did not mean to change.

Try to Avoid Application-Level Joins

Apart from the `$lookup` aggregation stage, MongoDB does not offer server-side joins, so applications often fetch data from several collections and join it at the application level. If you retrieve data from multiple collections and join a large amount of it, you have to call the database several times to get all the necessary data. Each of those round trips goes over the network, which adds time. If your application relies heavily on joins, denormalizing the schema makes more sense: with embedded documents you can get all the required data in a single query.

While searching or aggregating, one often sorts data. If no index is available on the sort field, MongoDB is forced to sort in memory without an index. There is a memory limit on the total size of all documents involved in such a sort (32 MB in older releases). If MongoDB hits that limit, it may either produce an error or return an empty set. Even when sorting is the last thing your pipeline needs, place the `$sort` stage early, at the start or right after `$match`, so that an index can cover it.

Having discussed adding indexes, it is also important not to add unnecessary ones. Every insert, and every update that changes an indexed field, must also update the affected indexes.
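As a sketch of the sort advice, assume a hypothetical `orders` collection that is filtered by `status` and sorted by `created_at`. The compound index and the pipeline are built here as plain Python structures. The pymongo calls `create_index` and `aggregate` are shown only in comments because they need a running server.

```python
from typing import Dict, List, Tuple

# Hypothetical compound index: equality field first, then the sort field.
# With pymongo: db.orders.create_index(ORDERS_INDEX)
ORDERS_INDEX: List[Tuple[str, int]] = [("status", 1), ("created_at", -1)]


def recent_orders_pipeline(status: str, limit: int) -> List[Dict]:
    """Pipeline whose $match and $sort come first, so the index can serve them.

    Placed after $project, $unwind or $group, the sort would generally have to
    run in memory, subject to the blocking-sort memory limit.
    """
    return [
        {"$match": {"status": status}},   # equality on the index prefix
        {"$sort": {"created_at": -1}},    # same field and direction as the index
        {"$limit": limit},                # keep the sorted result small
        {"$project": {"_id": 0, "order_id": 1, "total": 1, "created_at": 1}},
    ]


# With pymongo: db.orders.aggregate(recent_orders_pipeline("shipped", 20))
for stage in recent_orders_pipeline("shipped", 20):
    print(stage)
```

The projection comes last on purpose. Reshaping documents before the sort would prevent the sort from using the index.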

MongoDB is often described as “schemaless”: it does not impose any schema on the documents stored inside a collection. Because documents are stored in a JSON-like format (BSON), each document can have its own structure. This is useful in the early stages of development, but later you may want to enforce schema validation when inserting new documents, for better performance and scalability. In short, “schemaless” doesn't mean you don't need to design your schema.

Figuring out the schema design that best suits your application can be tedious. In this article, I will discuss some general tips for planning your MongoDB schema. Here are some points you can consider while designing it.

If your schema allows documents that grow in size continuously, take steps to avoid this, because it can degrade database and disk I/O performance. MongoDB limits each document to 16 MB. If your documents grow toward 16 MB over time, that is a sign of bad schema design, and it can sometimes cause queries to fail. You can use document buckets or document pre-allocation techniques to avoid this situation. If your application genuinely needs to store documents larger than 16 MB, consider using the MongoDB GridFS API.

If you update the whole document, MongoDB may rewrite the entire document elsewhere in memory. This can drastically degrade the write performance of your database.
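The document-bucket technique can be sketched as follows. Readings are grouped into fixed-size bucket documents rather than appended to one ever-growing array, so no single document grows without bound toward the 16 MB limit. The sensor data, field names and tiny `BUCKET_SIZE` are hypothetical. The commented upsert is a common server-side idiom, assumed here rather than taken from the article.

```python
from datetime import datetime, timedelta
from typing import Dict, Iterable, List

BUCKET_SIZE = 3  # hypothetical cap; real buckets usually hold far more entries


def bucket_readings(sensor_id: str, readings: Iterable[Dict]) -> List[Dict]:
    """Group readings into fixed-size bucket documents instead of one growing array."""
    buckets: List[Dict] = []
    for reading in readings:
        # Start a new bucket when there is none yet or the current one is full.
        if not buckets or buckets[-1]["count"] >= BUCKET_SIZE:
            buckets.append(
                {"sensor_id": sensor_id, "start": reading["ts"], "count": 0, "readings": []}
            )
        current = buckets[-1]
        current["readings"].append(reading)
        current["count"] += 1
    return buckets


# Server-side equivalent (assumed idiom), one upsert per reading r:
#   db.readings.update_one(
#       {"sensor_id": sid, "count": {"$lt": BUCKET_SIZE}},
#       {"$push": {"readings": r}, "$inc": {"count": 1},
#        "$setOnInsert": {"start": r["ts"]}},
#       upsert=True)

t0 = datetime(2024, 1, 1)
readings = [{"ts": t0 + timedelta(minutes=i), "value": 20 + i} for i in range(7)]
for b in bucket_readings("sensor-42", readings):
    print(b["start"], b["count"])
```

Seven readings produce three buckets holding 3, 3 and 1 entries. Each bucket stays small no matter how long the sensor keeps reporting.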


Whether this is a good design or not will depend on your queries. In general, you want to avoid using joins in Mongo (they're still possible, but if you're doing a bunch of joins, you're using it wrong, and really should use a relational DB :-)

For example, if most of your queries are on the server_log collection and only use the fields in that collection, then you'll be fine. OTOH, if your server_log queries always need to pull in data from the server collection as well (say, the name and userId fields), then it might be worth selectively denormalizing that data. That's a fancy way of saying that you may wish to copy the name and userId fields into your server_log documents, so that your queries can avoid having to join with the server collection. Of course, every time you denormalize, you add complexity to your application, which must now ensure that the data is consistent across multiple collections (e.g., when you change the server name, you have to make sure you change it in the server_logs, too).

You may wish to make a list of the queries you expect to perform and see if they can be done with a minimum of joins with your current schema. If not, see if a little denormalization will help. If you're getting to the point where you need either a bunch of joins or a lot of manual management of denormalized data to satisfy your queries, then you may need to rethink your schema or even your choice of DB.
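The suggestion to copy `name` and `userId` into each `server_log` document can be sketched in plain Python. In-memory lists stand in for the two collections, and the field values and the `serverId` reference field are hypothetical. The second function shows the consistency cost described above: a rename must be applied to every copied value.

```python
from typing import Dict, List


def make_log_entry(server: Dict, message: str) -> Dict:
    """Create a server_log document with name and userId copied in (denormalized)."""
    return {
        "serverId": server["_id"],   # reference kept for the occasional full lookup
        "name": server["name"],      # copied so log queries need no join
        "userId": server["userId"],  # copied for the same reason
        "message": message,
    }


def rename_server(server_id: int, new_name: str,
                  server_docs: List[Dict], log_docs: List[Dict]) -> int:
    """Rename a server and every copy of its name; return how many logs changed.

    In MongoDB this would be two separate writes, e.g. update_one on the server
    collection and update_many on server_log with the filter
    {"serverId": server_id} and the update {"$set": {"name": new_name}}.
    """
    for s in server_docs:
        if s["_id"] == server_id:
            s["name"] = new_name
    changed = 0
    for log in log_docs:
        if log["serverId"] == server_id:
            log["name"] = new_name
            changed += 1
    return changed


# Hypothetical data standing in for the server and server_log collections.
servers = [
    {"_id": 1, "name": "web-01", "userId": 7},
    {"_id": 2, "name": "db-01", "userId": 9},
]
server_logs = [
    make_log_entry(servers[0], "boot"),
    make_log_entry(servers[0], "deploy"),
    make_log_entry(servers[1], "backup"),
]

changed = rename_server(1, "web-01a", servers, server_logs)
print(changed)                               # 2
print([log["name"] for log in server_logs])  # ['web-01a', 'web-01a', 'db-01']
```

Reads on `server_log` now need no join, but every rename fans out to all matching log entries. That extra work grows with the number of copies.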